Coursera certainly deserves praise for opening access to higher education, especially since they also offer courses in the humanities, unlike competitors such as Udacity or EdX. Solutions to exercises in mathematics or computer science can easily be graded because there is an expected correct answer in undergraduate-level courses, but assessing the relative merits of an essay in, say, literary studies isn’t quite so straightforward. Coursera attempts to solve this problem by letting students grade the essays of their peers.

I do see some issues with automated grading even in code submissions, but that’s a topic for another article. Right now I am more concerned with the peer review system Coursera has implemented. I am sure they will attempt to modify their system eventually, but at the moment there are some serious issues. Please note that I am not speaking as an observer on the sidelines. I have sampled numerous courses, and finished three so far. Especially in more technical courses, the content seems to be very good, and a motivated self-learner could easily replace a course at a brick-and-mortar university with one of Coursera’s, if they are more concerned about learning something new and care little about getting a paper.

On the other hand, the humanities courses don’t seem to fare that well. You’d really have to lower your expectations. I’ll talk about the shortcomings in a moment, but before that, let’s review the ambitions Coursera has for their peer review process:

Following the literature on student peer reviews, we have developed a process in which students are first trained using a grading rubric to grade other assessments. This has been shown to result in accurate feedback to other students, and also provide a valuable learning experience for the students doing the grading. Second, we draw on ideas from the literature on crowd-sourcing, which studies how one can take many ratings (of varying degrees of reliability) and combine them to obtain a highly accurate score. Using such algorithms, we expect that by having multiple students grade each homework, we will be able to obtain grading accuracy comparable or even superior to that provided by a single teaching assistant.

What actually happens is that every student has to evaluate five essays to receive feedback on his own work, which in turn also gets evaluated by five other students. Your score is merely an average of the scores you have received from other students in the course. Scoring is done according to various rubrics, reflecting the criteria of the assignment. Let’s say you were asked to discuss events that happened in a certain time period. If you did that, you were supposed to get one point, if not, you got zero. Most of the rubrics were quite surprising if your assumption was that people writing those essays actually paid attention to the directions. It turned out that many didn’t. I read a few essays that were completely unrelated. The bar seems to be rather low.
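To make the arithmetic concrete, here is a minimal sketch of that averaging step. It assumes the final score is a plain arithmetic mean of five rubric totals, as described above. The function name `peer_score` and the sample ratings are made up for illustration, and the median is shown only for contrast, not as something Coursera is known to use.

```python
from statistics import mean, median


def peer_score(ratings):
    """Return the arithmetic mean of the rubric totals given by peer reviewers."""
    return mean(ratings)


# Hypothetical rubric totals (out of 10) from five peer reviewers.
consistent = [8, 8, 9, 8, 9]
one_outlier = [8, 8, 9, 8, 0]  # same essay, but one careless or hostile reviewer

print(peer_score(consistent))   # mean when all five reviewers roughly agree
print(peer_score(one_outlier))  # mean after a single zero is mixed in
print(median(one_outlier))      # the median, for contrast, ignores the lone zero
```

With only five ratings per essay, one stray zero pulls the mean from 8.4 down to 6.6, while the median of the same five ratings stays at 8.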

An article on Inside HigherEd recently pointed out some issues with Coursera’s peer review system. I do think that the author had a rather narrow perspective, despite raising awareness of some issues with the nature of the feedback you receive from other students. She pointed out that feedback was highly variable, ranging from a “good job!” to downright abusive language. Further, feedback is one-directional, and there is no way to comment on feedback or challenge a perceived unfair assessment. Given the current state of Coursera with low-quality feedback being the norm, I am tempted to view this as a feature and not a bug. Yet, in a traditional university, a great part of the learning experience consists in discussing your work with your supervisor.

The other issues Inside HigherEd mentions go hand in hand: Anonymity of feedback is not necessarily a problem, but the associated lack of community is. In many courses, the forums have a low signal-to-noise ratio. Of course, this won’t matter if someone finds it deeply gratifying to express “me too!” statements in poor English. I’d imagine that most exchanges via text messages or on Twitter follow exactly this pattern. Personally, I saw little value in threads in which over 100 people reveal which country they are from, though.

But let’s look at methodological problems of the crowd review system Coursera uses. The assumption is that a random number of students is perfectly able to objectively assess the works of others, despite their own shortcomings. This itself is a rather daring hypothesis that hardly passes the laugh test since it presupposes that all students, once they submit their essays, turn into omniscient and objective evaluators who are able to detect the kind of mistakes they made before their role switched from student to peer reviewer. Further down I’ll present an argument that is supposed to show that crowd review can’t even work in a best case scenario, but for now I’ll focus on what I perceive to be the current reality on Coursera.

Providers of MOOCs focus on vanity metrics like the number of “enrollments.” It seems that no matter what kind of course is launched, eventually between 50,000 and 100,000 students sign up. But this number itself is highly inflated. It’s common that not even half the students finish the first week’s assignment. This is not because they are all unmotivated or realize that they have bitten off more than they can chew. Instead, “enrolling” is the only way to sample the course content. If you just wanted to have a look, you’d have to register first. I am fairly certain that if, for instance, the first week of lectures and assignments was available immediately, then the big drop off in numbers could be largely avoided. This would be a straightforward solution, but how impressive would it be if Daphne Koller and Andrew Ng had to tell their investors that some recent changes lowered the number of enrollments by 50%? This is not just an issue of Coursera or Udacity. Twitter and Facebook are also full of inactive accounts, in addition to fake ones. Yet their reported total number of users is rarely questioned in the press.

[EDIT: The world of MOOCs is moving fast. Coursera has very recently begun making all video lectures of about a dozen courses available without registration.]

After week one about half the students are left. This will still be an enormous number of people. Yet, it doesn’t mean that they are all automatically well-qualified. “Opening up” higher education brings this to light, and only reveals educational deficits. I am far from putting the blame on the students. Many first-world countries would rather invest in their military or in propping up a global fantasy economy than create equal opportunities by investing in schools or libraries. Therefore, it is little surprise that quite a few of the people who are active on the forums, or participate in the peer review process, would seem quite out of place at a proper university. Given that all it takes to sign up is an email address, this is to be expected.

Unfortunately, an immediate effect is the relatively low quality of the discussion forums, which I had touched upon before. If you skim a few threads, you’ll be amazed at the widespread lack of etiquette, and often poor grammar and orthography. It’s not as bad as a random collection of comments on YouTube, but at worst it’s quite close. As the courses go on, it normally gets better, but the first two or three weeks normally make you want to stay away from online discussions.

The rather diverse student body has ramifications for crowd reviewing as a whole. Put five students with a poor command of English in a room, tell them to grade each other’s work, and the result won’t be especially awe-inspiring. While this example may sound absurd, it is a rather common occurrence on Coursera. Of course, if you only want to check for basic writing ability, then you can just run a grammar and spell checker. I don’t want to sound condescending, but about half the students whose essays I read didn’t even bother with this. But if people can’t even meet this goal, then it’s arguably too much to ask for coherent arguments.

As Coursera expands, I can only see this getting worse, because September will never end. Without strong moderation, the quality of sites degrades. However, since strong moderation isn’t conducive to growth, good sites sooner or later degenerate. It’s probably only a matter of time until people will post “First!” or “+1” on a thread where someone asks a question about algorithmic efficiency.

Finally, let’s consider a best case scenario. One may want to think that in an ideal world you could have stellar students grading the work of other equally stellar students. It’s quite obvious that they would be able to give each other much more meaningful feedback. Yet, they don’t know what they don’t know. A motivated student will therefore learn much more from interacting with a tutor who is able to make him consider a different point of view. In fact, one of the benefits of attending a university course that focuses on writing is that you can discuss your work with someone who is more experienced and more educated. Of course, not all professors are, but at the better universities you should find a lot of smart professors and highly competent tutors. It will take significant breakthroughs in AI to replicate something similar.

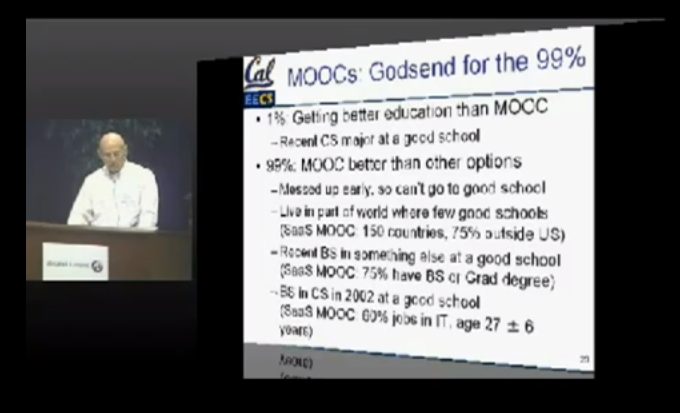

In summary, it seems that in a best-case scenario Coursera will stunt the growth of motivated students. This ties in with a recent talk by David Patterson, where he stated that MOOCs are a “godsend for the 99%” since they were better than other options. The “1%” were CS majors at good schools, and their education was undeniably superior to what a MOOC could provide. He was referring to his own Software as a Service course, which was originally offered through Coursera, but is now available on EdX.

Patterson was referring to a computer science course. In the humanities, though, a lot has to change until a similar claim could be made. The worst case scenario I described, which seems rather close to the reality on Coursera, has quite some room for improvement. I am tempted to say that this is an enormous problem, and I don’t see how the goals of “opening up higher education” in the humanities and raising standards can be achieved. In the end there may be the realization that high school education has to be fixed before MOOCs can reach their full potential. We’ll have to see how well Khan Academy will be doing on that front, but it certainly looks promising.

Fascinating article, especially given how long ago you wrote this, and that virtually none of these issues have been addressed.

I am currently taking a course on Coursera which has a social science angle, so room for interpretation, and it’s the first I’ve done that has a peer review component.

I fully agree with your assessment about the poor quality of students doing the review, but have witnessed something altogether more concerning. Since the automated assignment of reviews to others doesn’t appear to work well, students are encouraged to post in the forums to ask others to review their work, by providing a link. This has essentially become the only purpose of the forums.

Having reviewed a sample of about 10, I observed the following:

– only one actually submitted an assignment meeting the basic criteria. This is without considering the quality of the analysis.

– almost half were attempts that surely took no more than a few minutes, failed to include the basics of what was requested, and didn’t show any sign that the learner had watched or read any of the course content up to that point.

– the remainder, a good 50%, were not actually course submissions at all. They were random attachments: an application for an Erasmus programme, a McKinsey business report, a blank page, and so on. These were not shown to me randomly, but from posts on the forums.

Given the number of forum posts requesting high marks and also accompanied by promises to ‘review you with full grades’, I can only conclude that peer review on Coursera is becoming an implicit social contract to pass another student in return for the same favour, regardless of what was submitted. I’ve reported all cases to Coursera using their tools to do so, but who knows what action is taken.

In the process, I’ve become disheartened with what I felt was a good platform. I primarily do the courses for learning, of course, but have purchased the certificates as evidence of my work and to support the university offerings. It’s certainly made me think twice about the validity of Coursera certificates when I see them on the CVs and LinkedIn profiles of others.

100%. Excellent article.

With a Master’s degree in Business from a first world university, I signed up for a Coursera HR Management Certificate under the direction of Jako Lok of Macquarie University Australia, as I thought it might be useful given my career trajectory.

While course content and associated assignments were excellent, the peer review process was appalling.

It seemed my ‘peers’ hardly spent any time or effort in reading assignments, let alone answering them and most had a very poor standard of English. Given the low quality of answers, it was very difficult to mark their assignments positively according to the rubric, but I did, despite misgivings.

My assignments were completed seriously, thoughtfully and thoroughly. After about the third assignment, I was failed by one of these learned ‘peers’. Knowing this was completely unfair, having marked ‘the competition’, I appealed to Jako Lok by email.

I received an automated response offering me the option to redo the module or leave.

Needless to say I saw no need to continue paying Coursera or Macquarie, both of which have irreparably damaged their reputations by condoning ‘bullying’ behaviour and issuing essentially ‘fraudulent’ certificates.

Avoid.